From Kernel WASM to User-Space Policy Evaluation: Lessons Learned at Riptides

Introduction

At Riptides, we're building a platform that provides seamless kernel-based non-human identity (NHI) with SPIFFE and kTLS, delivering deep socket-level security and real-time policy enforcement inside the Linux kernel. One of our core challenges has been finding the right architecture for policy evaluation: specifically, where and how to run the Open Policy Agent (OPA) policies that govern socket connections in real time.

This is the story of our journey from an ambitious kernel-space WASM implementation to a pragmatic user-space solution, and the hard-earned lessons we learned along the way.

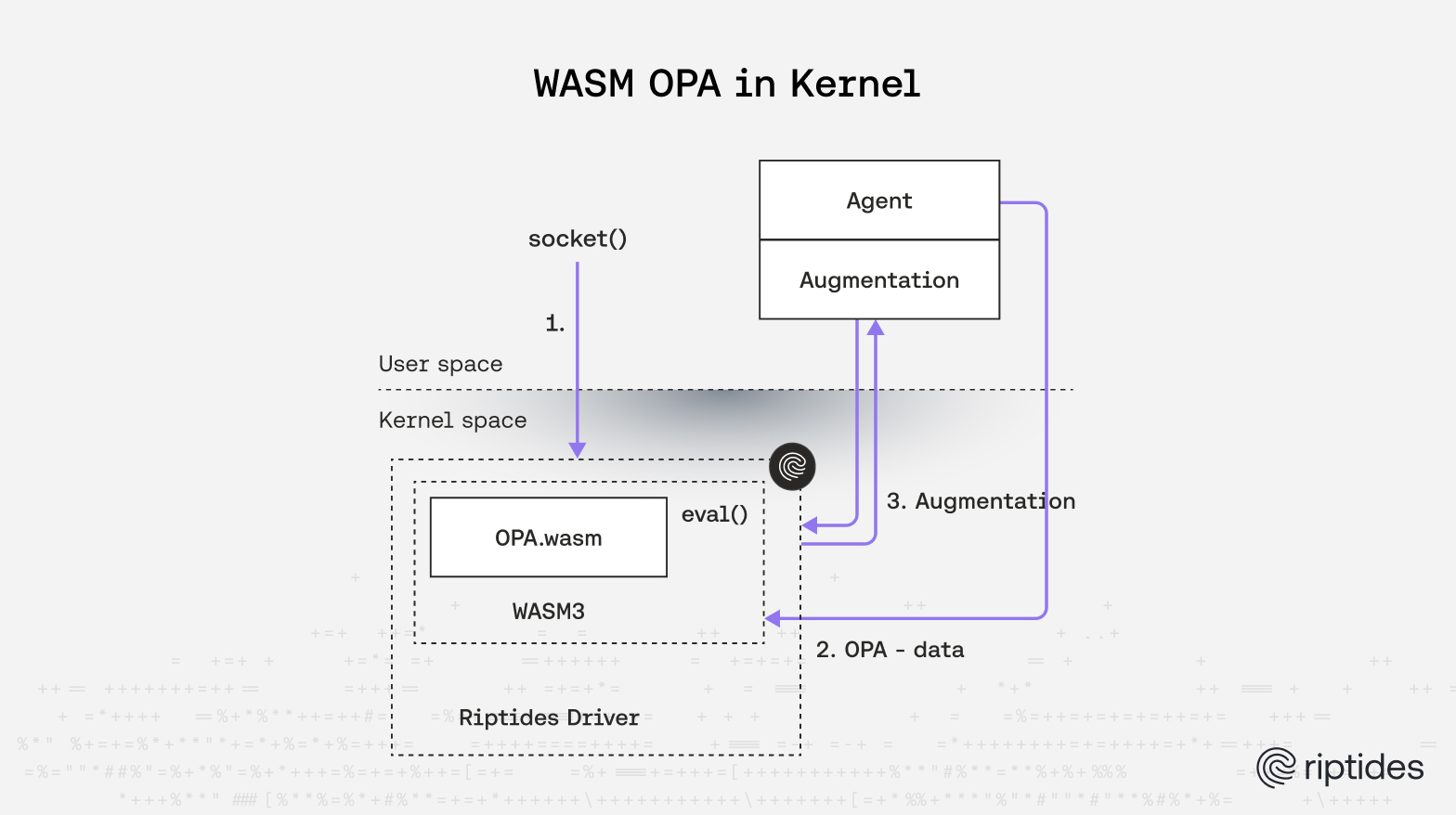

The Initial Vision: WASM in the Kernel

When we first architected the Riptides platform, we had a bold vision: evaluate OPA policies directly in kernel space using a WebAssembly runtime. We thought this could work as an eBPF alternative of sorts, providing a more flexible and powerful way to run policy logic in kernel space. The reasoning seemed sound:

- Ultra-low latency: Policy evaluation would happen at the kernel level without context switches

- Isolation: WASM provides sandboxing for untrusted policy code

- Flexibility: Policies could be updated without kernel module changes

- Performance: No serialization overhead between kernel and userspace

- eBPF alternative: More expressive than eBPF for complex policy logic while maintaining kernel-space execution

To make this vision a reality, we forked the wasm3 WebAssembly runtime and began the challenging work of porting it to run in Linux kernel space. For those interested in the technical details, our kernel port is available at github.com/riptideslabs/wasm3-kernel. Ours was not the first attempt to run Wasm in the kernel; see https://github.com/wasmerio/kernel-wasm for earlier work.

The Technical Journey

Porting wasm3 to Kernel Space

The wasm3 runtime was an attractive choice for kernel porting because:

- It's designed to be lightweight and embeddable

- Uses an interpreter rather than JIT compilation (safer and simpler in kernel context)

- Has minimal external dependencies

- Relatively small codebase

However, porting any userspace runtime to kernel space presents significant challenges. Memory management seemed like an easy one:

    // Example of kernel-space WASM memory management
    #include <linux/slab.h>

    static void *wasm_kernel_malloc(size_t size)
    {
        /* GFP_KERNEL may sleep, so this is only safe in process context */
        return kmalloc(size, GFP_KERNEL);
    }

    static void wasm_kernel_free(void *ptr)
    {
        kfree(ptr);
    }

    // Replaced standard library functions with kernel equivalents
    #define malloc(size) wasm_kernel_malloc(size)
    #define free(ptr) wasm_kernel_free(ptr)

We had to:

- Replace all standard library calls with kernel equivalents

- Implement custom memory management for WASM linear memory

- Handle stack overflow protection in kernel context

- Ensure atomic operations were kernel-safe

- Remove floating-point operations (kernel code cannot freely use the FPU, because its state is not saved and restored for kernel execution by default)

The floating-point removal was particularly challenging. We had to modify wasm3 to handle the absence of floating-point operations, but many OPA-compiled WASM modules contained floating-point operations for numeric computations. While OPA's WASM compilation generally produces efficient code, certain Rego built-in functions like mathematical operations, JSON number parsing, or comparison functions could generate floating-point instructions. This incompatibility between our floating-point-free kernel environment and the OPA WASM compilation output became a significant source of policy evaluation failures, especially for policies that performed numeric operations or worked with JSON data containing decimal numbers.

Integrating OPA Policies

Once we had wasm3 running in the kernel, we integrated it with OPA policy evaluation. OPA provides the ability to compile Rego policies into WASM modules using opa build -t wasm, which creates standalone WASM executables that can be loaded into any WASM runtime:

    // Simplified policy evaluation in kernel module
    static int evaluate_socket_policy(riptides_socket *s, const char *policy_wasm_data, size_t wasm_size)
    {
        wasm3_runtime *runtime = get_wasm_runtime();

        // Load OPA-compiled policy WASM module
        wasm3_module *policy_module = wasm3_load_module(runtime, policy_wasm_data, wasm_size);

        // Prepare socket context as JSON input
        char input_json[512];
        snprintf(input_json, sizeof(input_json),
                 "{"
                 "\"source_ip\":\"%s\","
                 "\"destination_ip\":\"%s\","
                 "\"destination_port\":%d,"
                 "\"process_name\":\"%s\""
                 "}",
                 s->src_addr, s->dst_addr, s->dst_port, current->comm);

        // Allocate memory in WASM module and copy input
        uint32_t input_ptr = wasm3_call_function(policy_module, "opa_malloc", strlen(input_json) + 1);
        memcpy(wasm3_get_memory(runtime, input_ptr), input_json, strlen(input_json) + 1);

        // Parse JSON input in WASM module
        uint32_t parsed_input = wasm3_call_function(policy_module, "opa_json_parse", input_ptr, strlen(input_json));

        // Create evaluation context and set input
        uint32_t ctx = wasm3_call_function(policy_module, "opa_eval_ctx_new");
        wasm3_call_function(policy_module, "opa_eval_ctx_set_input", ctx, parsed_input);

        // Evaluate policy
        wasm3_call_function(policy_module, "eval", ctx);

        // Get result
        uint32_t result_addr = wasm3_call_function(policy_module, "opa_eval_ctx_get_result", ctx);
        uint32_t result_json = wasm3_call_function(policy_module, "opa_json_dump", result_addr);

        // Parse result - OPA returns [{"result": true/false}] for allow/deny policies
        const char *result_str = wasm3_get_memory(runtime, result_json);
        int decision = (strstr(result_str, "\"result\":true") != NULL) ? POLICY_ALLOW : POLICY_DENY;

        // Cleanup
        wasm3_call_function(policy_module, "opa_free", input_ptr);

        return decision;
    }

Early Success

Initially, the system worked beautifully! The OPA WASM compilation workflow was straightforward:

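    # Compile Rego policy to WASM (opa build writes bundle.tar.gz)
    opa build -t wasm -e riptides/socket/allow policy.rego

    # Extract the compiled module from the bundle
    tar -xzf bundle.tar.gz /policy.wasm

    # Load the module into our kernel module
    echo "load $(base64 policy.wasm)" > /dev/riptides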

We could:

- Compile standard Rego policies to WASM using opa build -t wasm

- Load the compiled WASM modules into the kernel via our device driver

- Evaluate policies for socket connections with microsecond latency

- Update policies by simply recompiling and reloading WASM modules

- Maintain strong isolation between policy code and kernel code

The performance numbers were impressive - policy evaluation took less than 10 microseconds in most cases, and the WASM modules were compact (typically 50-200KB for complex policies).

The Reality Check: When WASM in Kernel Goes Wrong

However, as we moved from proof-of-concept to production-ready code, we encountered a series of challenges that ultimately led us to reconsider our approach.

Memory Management Nightmares

WASM linear memory management in kernel space proved problematic:

    // This became a source of kernel panics
    static int wasm_grow_memory(wasm3_runtime *runtime, uint32_t pages)
    {
        size_t new_size = pages * WASM_PAGE_SIZE;

        // vmalloc for large allocations, but this can fail under memory pressure
        void *new_memory = wasm_kernel_malloc(new_size);
        if (!new_memory) {
            return -ENOMEM; // This could panic the kernel
        }

        // Copy existing memory... potential for corruption here
        memcpy(new_memory, runtime->memory, runtime->memory_size);
        wasm_kernel_free(runtime->memory);

        runtime->memory = new_memory;
        runtime->memory_size = new_size;
        return 0;
    }

Issues we encountered:

- Memory fragmentation: Large WASM linear memory allocations could fragment kernel memory

- OOM conditions: Policy evaluation could trigger out-of-memory conditions that panic the kernel

- Memory leaks: Complex WASM module lifecycle management led to subtle memory leaks

- Stack overflow: Recursive policy evaluation could overflow kernel stacks
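One way to contain the growth problems listed above is to cap linear memory and reject a request before anything is allocated. The sketch below shows those checks in Go for readability. It is not the wasm3 or Riptides implementation, and the names linearMemory, maxPages, and errLimit are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
)

const wasmPageSize = 64 * 1024 // WebAssembly pages are 64 KiB

var errLimit = errors.New("linear memory limit exceeded")

// linearMemory is a hypothetical stand-in for a WASM instance's memory.
type linearMemory struct {
	data     []byte
	maxPages uint32 // hard cap chosen by the embedder (assumption)
}

// grow adds delta pages, refusing the request before allocating anything
// if the new size would exceed the cap.
func (m *linearMemory) grow(delta uint32) error {
	cur := uint64(len(m.data)) / wasmPageSize
	want := cur + uint64(delta) // 64-bit math, so uint32 inputs cannot overflow
	if want > uint64(m.maxPages) {
		return errLimit
	}
	next := make([]byte, want*wasmPageSize)
	copy(next, m.data) // old contents are preserved, new pages are zeroed
	m.data = next
	return nil
}

func main() {
	m := &linearMemory{maxPages: 4}

	err := m.grow(2)
	fmt.Println(err, len(m.data)/wasmPageSize)

	err = m.grow(3) // would reach 5 pages, above the cap of 4
	fmt.Println(err, len(m.data)/wasmPageSize)
}
```

A failed grow leaves the existing memory untouched, so the caller can deny the connection instead of continuing with a half-updated runtime.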

Debugging Complexity

Debugging WASM execution in kernel space was extremely challenging:

- No standard debugging tools worked

- Kernel panics provided minimal context about WASM execution state

- Policy bugs could crash the entire system

- Difficult to distinguish between wasm3 bugs, our porting bugs, and policy bugs

Security Concerns

While WASM provides isolation, running it in kernel space introduced new attack vectors:

- Bugs in the WASM runtime could compromise kernel security

- Poorly written policies could potentially exploit kernel interfaces

- The attack surface of the kernel was significantly increased

- Fuzzing and security testing became much more complex

Maintenance Burden

Keeping our wasm3 fork in sync with upstream while maintaining kernel compatibility proved unsustainable:

- Every wasm3 update required careful porting work

- Kernel API changes affected our runtime integration

- Supporting multiple kernel versions became a nightmare

- The codebase became increasingly divergent from upstream wasm3

- Upstream wasm3 became largely unmaintained during this period

The Pivot: Moving to User-Space

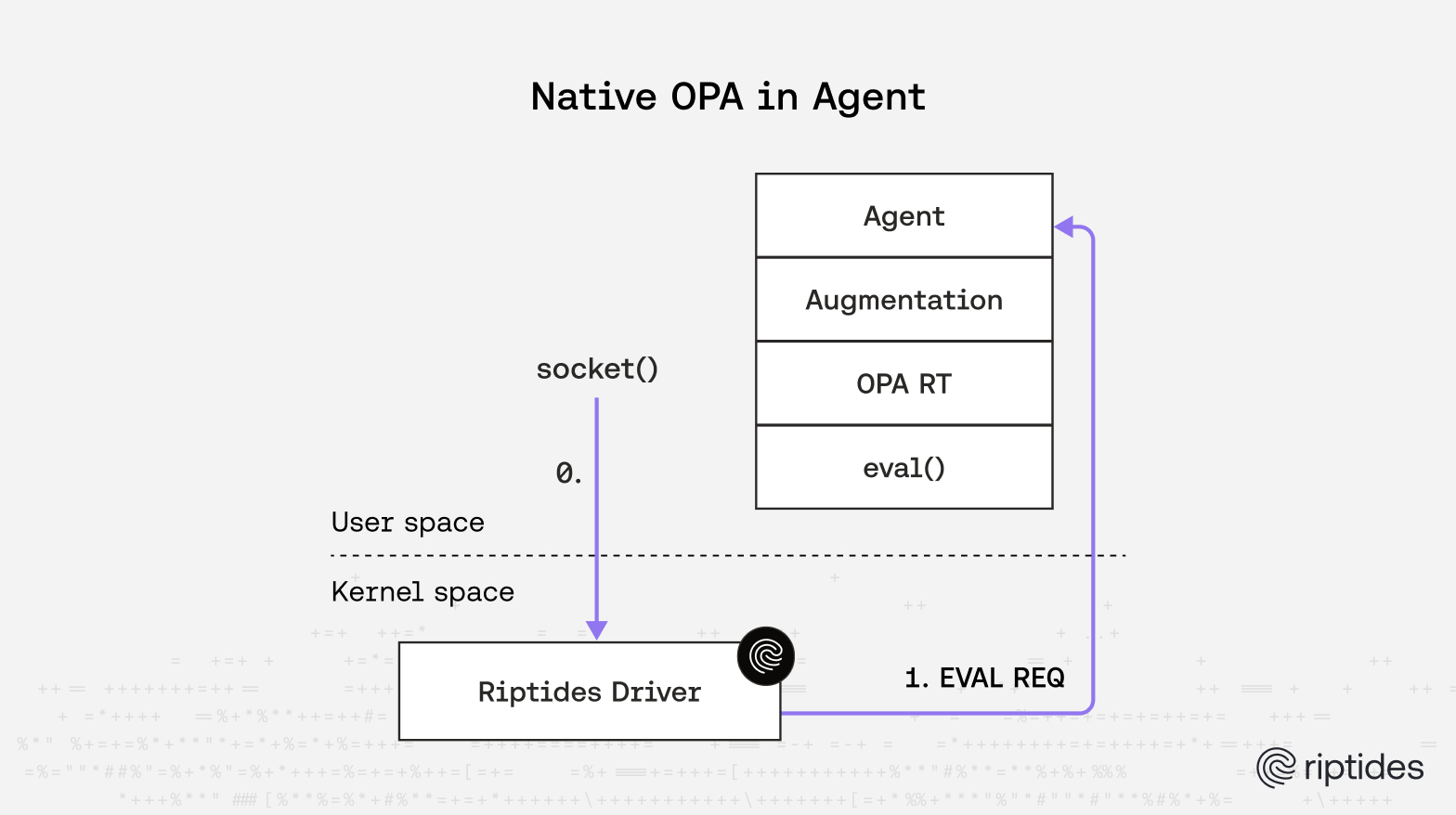

After months of fighting these issues, we made the difficult decision to move policy evaluation out of the kernel and into our Go-based agent process.

New Architecture

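In the new architecture, the kernel module intercepts each connection, checks its decision cache, and on a miss sends a policy request to the Go agent, which evaluates the OPA policy and returns the decision.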

Protocol Buffer Communication

We use protocol buffers with nanopb for efficient kernel-userspace communication. The idea of using protobuf for kernel-userspace communication isn't entirely new - NetBSD explored this concept and demonstrated its feasibility in their 2015 EuroBSDCon presentation, showing that structured serialization protocols can work well in kernel contexts.

    // policy_request.proto
    syntax = "proto3";

    message PolicyEvaluationRequest {
        string source_ip = 1;
        string destination_ip = 2;
        uint32 destination_port = 3;
        string process_name = 4;
        string hostname = 5;
        map<string, string> labels = 6;
    }

    message PolicyEvaluationResponse {
        enum Decision {
            DENY = 0;
            ALLOW = 1;
        }
        Decision decision = 1;
        string reason = 2;
        uint32 cache_ttl = 3;
    }

Kernel-Side Implementation

    // Kernel module policy evaluation
    static int evaluate_connection_policy(riptides_socket *s)
    {
        // Check cache first
        cached_decision *cached = lookup_policy_cache(s);
        if (cached && !cache_expired(cached)) {
            return cached->decision;
        }

        // Prepare protobuf request
        PolicyEvaluationRequest request = PolicyEvaluationRequest_init_zero;
        encode_socket_context(s, &request);

        // Send to user-space agent via device file
        // (zero-initialized so no field is read uninitialized on a partial reply)
        PolicyEvaluationResponse response = PolicyEvaluationResponse_init_zero;
        int ret = send_policy_request(&request, &response);
        if (ret == 0) {
            // Cache the result
            cache_policy_decision(s, &response);
            return response.decision;
        }

        // Default to deny on communication failure
        return POLICY_DENY;
    }

User-Space Agent Implementation

    // Go agent policy evaluation
    func (a *Agent) EvaluatePolicyRequest(req *PolicyEvaluationRequest) *PolicyEvaluationResponse {
        // Prepare OPA input
        input := map[string]interface{}{
            "source_ip":        req.SourceIp,
            "destination_ip":   req.DestinationIp,
            "destination_port": req.DestinationPort,
            "process_name":     req.ProcessName,
            "hostname":         req.Hostname,
            "labels":           req.Labels,
        }

        // Evaluate against OPA policies. a.query is assumed here to be a
        // rego.PreparedEvalQuery for "data.riptides.socket.allow", built once
        // at startup with rego.New(...).PrepareForEval(ctx).
        results, err := a.query.Eval(context.Background(), rego.EvalInput(input))
        if err != nil {
            log.Errorf("Policy evaluation failed: %v", err)
            return &PolicyEvaluationResponse{
                Decision: PolicyEvaluationResponse_DENY,
                Reason:   "evaluation_error",
            }
        }

        // Process results
        if len(results) > 0 && results[0].Expressions[0].Value == true {
            return &PolicyEvaluationResponse{
                Decision: PolicyEvaluationResponse_ALLOW,
                CacheTtl: 300, // 5 minutes
            }
        }

        return &PolicyEvaluationResponse{
            Decision: PolicyEvaluationResponse_DENY,
            Reason:   "policy_denied",
        }
    }

The Benefits of the New Architecture

Reliability and Stability

Moving policy evaluation to user-space immediately improved system stability:

- No more kernel panics: Policy bugs can't crash the kernel

- Better error handling: Graceful degradation when policies fail

- Easier debugging: Standard Go debugging tools work perfectly

- Improved testing: Unit tests, integration tests, and fuzzing all become straightforward

Maintainability

The new architecture is much more maintainable:

- Standard OPA: No custom WASM runtime to maintain

- Pure Go: Leverages Go's excellent ecosystem and tooling

- Simpler deployment: Policy updates don't require kernel module changes

- Better observability: Rich metrics and logging capabilities

- Reduced complexity: Previously, we needed to augment processes in kernel space, which required additional message passing to user-space for policy evaluation. Now all process context gathering and policy evaluation happens in the agent, eliminating this complexity

Performance Characteristics

While we lost the ultra-low latency of kernel-space evaluation, the performance is still excellent:

- Sub-millisecond evaluation: Most policies evaluate in 200-500 microseconds

- Efficient caching: Results are cached in kernel space for subsequent connections

- Batching optimization: Multiple policy requests can be batched together

- Async evaluation: Non-blocking policy evaluation for better throughput

Security Improvements

The security posture actually improved:

- Reduced attack surface: Kernel module is much simpler and focused

- Principle of least privilege: Policy evaluation runs in user-space with limited privileges

- Better isolation: Policies are isolated from kernel and from each other

- Easier auditing: Policy changes are easier to review and audit

Performance Comparison

The performance comparison revealed interesting insights:

Kernel WASM (wasm3 interpreter):

- Lower latency due to no context switching

- But interpreted execution was slower than expected

- Memory overhead from WASM linear memory allocation

- Complex garbage collection in kernel space

User-space OPA (compiled Go binary):

- Higher latency due to kernel-userspace communication

- But much faster policy execution due to compiled code

- More efficient memory usage

- Better CPU utilization

Surprisingly, the compiled OPA evaluation in Go was significantly faster than the interpreted WASM execution, even accounting for the overhead of kernel-userspace communication. The user-space approach provides more than adequate performance for real-world workloads, especially with effective caching strategies.

Lessons Learned

1. Complexity Has a Cost

Running WASM in kernel space was technically impressive, but the complexity cost was enormous. The maintenance burden, debugging difficulty, and stability issues far outweighed the performance benefits.

2. The Kernel Should Stay Simple

Kernels should focus on what they do best: resource management, scheduling, and hardware abstraction. Complex business logic like policy evaluation is better suited for user-space.

3. Performance Isn't Everything

While the kernel WASM approach offered lower latency by avoiding context switches, the user-space approach provides better overall system characteristics: reliability, maintainability, debuggability, and security.

4. Caching Changes Everything

Effective caching in the kernel module means that the higher latency of user-space evaluation only affects the first request. Subsequent requests to the same destination are served from cache with microsecond latency.
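The effect of caching on average latency is simple arithmetic: mean = hit ratio x cached cost + (1 - hit ratio) x miss cost. The sketch below works it through. The 500 microsecond miss cost is the upper end of the evaluation range quoted earlier; the 1 microsecond cached lookup and the hit ratios are illustrative assumptions, not measurements.

```go
package main

import "fmt"

// expectedLatencyMicros returns the mean per-connection latency for a given
// cache hit ratio: hit*cached + (1-hit)*miss.
func expectedLatencyMicros(hitRatio, cachedMicros, missMicros float64) float64 {
	return hitRatio*cachedMicros + (1-hitRatio)*missMicros
}

func main() {
	// Assumed inputs: 1 us for a cached lookup, 500 us for a cache miss.
	for _, hit := range []float64{0.5, 0.9, 0.99} {
		fmt.Printf("hit ratio %.2f -> %.2f us\n", hit, expectedLatencyMicros(hit, 1, 500))
	}
}
```

Under these assumptions the mean falls from roughly 250 microseconds at a 50% hit ratio to about 6 microseconds at 99%. The miss cost here covers only policy evaluation, so a real kernel-userspace round trip would add to it.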

5. Protobuf + nanopb Works Great

The combination of protobuf for schema definition and nanopb for efficient kernel-space encoding/decoding provides an excellent balance of performance and maintainability.
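Part of why this works well in a kernel module is that the protobuf wire format is small: each field is a varint key, (field number << 3) | wire type, followed by a varint or a length-prefixed byte string. The sketch below hand-encodes two PolicyEvaluationRequest fields using only the Go standard library, to show what goes over the device file. It is an illustration of the wire format, not nanopb's API, and the helper names are hypothetical.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// Protobuf wire types used below.
const (
	wireVarint = 0
	wireBytes  = 2
)

// appendTag writes the field key: (field number << 3) | wire type.
func appendTag(b []byte, field int, wireType int) []byte {
	return binary.AppendUvarint(b, uint64(field)<<3|uint64(wireType))
}

// appendString encodes a length-delimited string field.
func appendString(b []byte, field int, s string) []byte {
	b = appendTag(b, field, wireBytes)
	b = binary.AppendUvarint(b, uint64(len(s)))
	return append(b, s...)
}

// appendUint32 encodes a varint field.
func appendUint32(b []byte, field int, v uint32) []byte {
	b = appendTag(b, field, wireVarint)
	return binary.AppendUvarint(b, uint64(v))
}

func main() {
	var msg []byte
	msg = appendString(msg, 1, "10.0.0.1") // source_ip = 1
	msg = appendUint32(msg, 3, 443)        // destination_port = 3
	fmt.Printf("% x\n", msg)
	// prints: 0a 08 31 30 2e 30 2e 30 2e 31 18 bb 03
}
```

The whole request is 13 bytes: 0a 08 introduces the 8-byte string in field 1, and 18 bb 03 is field 3 carrying 443 as a two-byte varint.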

Living with the New Architecture

Six months into production with our user-space architecture, we're genuinely happy with the decision. The system feels solid in ways that our kernel WASM implementation never quite achieved.

From an operational perspective, policy deployments have become trivial. When we need to update a policy, we simply push new Rego files to our policy repository, and OPA picks them up automatically. No kernel module recompilation, no system restarts, no careful coordination between kernel and user-space components. Our security team can iterate on policies independently, testing them in development environments before rolling them out to production.

The observability story has also dramatically improved. We now have rich metrics showing policy evaluation times, decision distributions, and error rates. When a policy behaves unexpectedly, we can trace through the decision logic with standard debugging tools rather than trying to decipher kernel logs. Our compliance team particularly appreciates the detailed audit trails - every policy decision is logged with full context, making security reviews straightforward.

Perhaps most importantly, the system degrades gracefully under load or when things go wrong. If our agent process crashes or becomes unresponsive, the kernel module falls back to cached decisions or configurable default behaviors. With the WASM approach, a runtime error could potentially take down the entire kernel module.

The development velocity gains have been substantial as well. New team members can contribute to policy logic using familiar Go tooling and testing frameworks. We can run comprehensive test suites against our policy logic, something that was nearly impossible with the kernel WASM approach. Policy authors and kernel developers can work independently, which has eliminated many coordination bottlenecks.

Looking ahead, we're exploring some interesting optimizations enabled by the user-space approach. We're experimenting with machine learning models to predict policy decisions before they're requested, pre-warming caches for better performance. We're also investigating batch policy evaluation for workloads with predictable connection patterns. These kinds of sophisticated optimizations would have been extremely difficult to implement safely in kernel space.

Conclusion

The journey from kernel-space WASM to user-space OPA evaluation taught us that impressive technical achievements don't always make the best engineering decisions. While running WASM in the kernel was a fascinating technical challenge, the user-space approach provides better overall system characteristics for a production security platform.

Key takeaways for anyone considering similar architectural decisions:

- Measure total cost of ownership, not just raw performance

- Prioritize reliability and maintainability over theoretical performance gains

- Use the right tool for the job - kernels for kernel tasks, user-space for complex logic

- Design for operations - consider debugging, monitoring, and updates from day one

- Caching can bridge performance gaps between different architectural approaches

The Riptides platform is now more reliable, maintainable, and secure than ever before. Sometimes the best technical decision is to choose the boring, well-understood solution over the exciting, cutting-edge one.

Interested in how our Linux kernel journey unfolded and what we learned along the way?

- Rethinking Workload Identity at the Kernel Level

- Riptides: Kernel-Level Identity and Security Reinvented

- From Breakpoints to Tracepoints: An Introduction to Linux Kernel Tracing

- From Tracepoints to Metrics: A journey from kernel to user-space

- Seamless Kernel-Based Non-Human Identity with kTLS and SPIFFE

- Linux kernel module telemetry: beyond the usual suspects

- From Tracepoints to Prometheus: the journey of a kernel event to observability

If you enjoyed this post, follow us on LinkedIn and X for more updates. If you’d like to see Riptides in action, get in touch with us for a demo.
