Supercharge Kafka security with Riptides

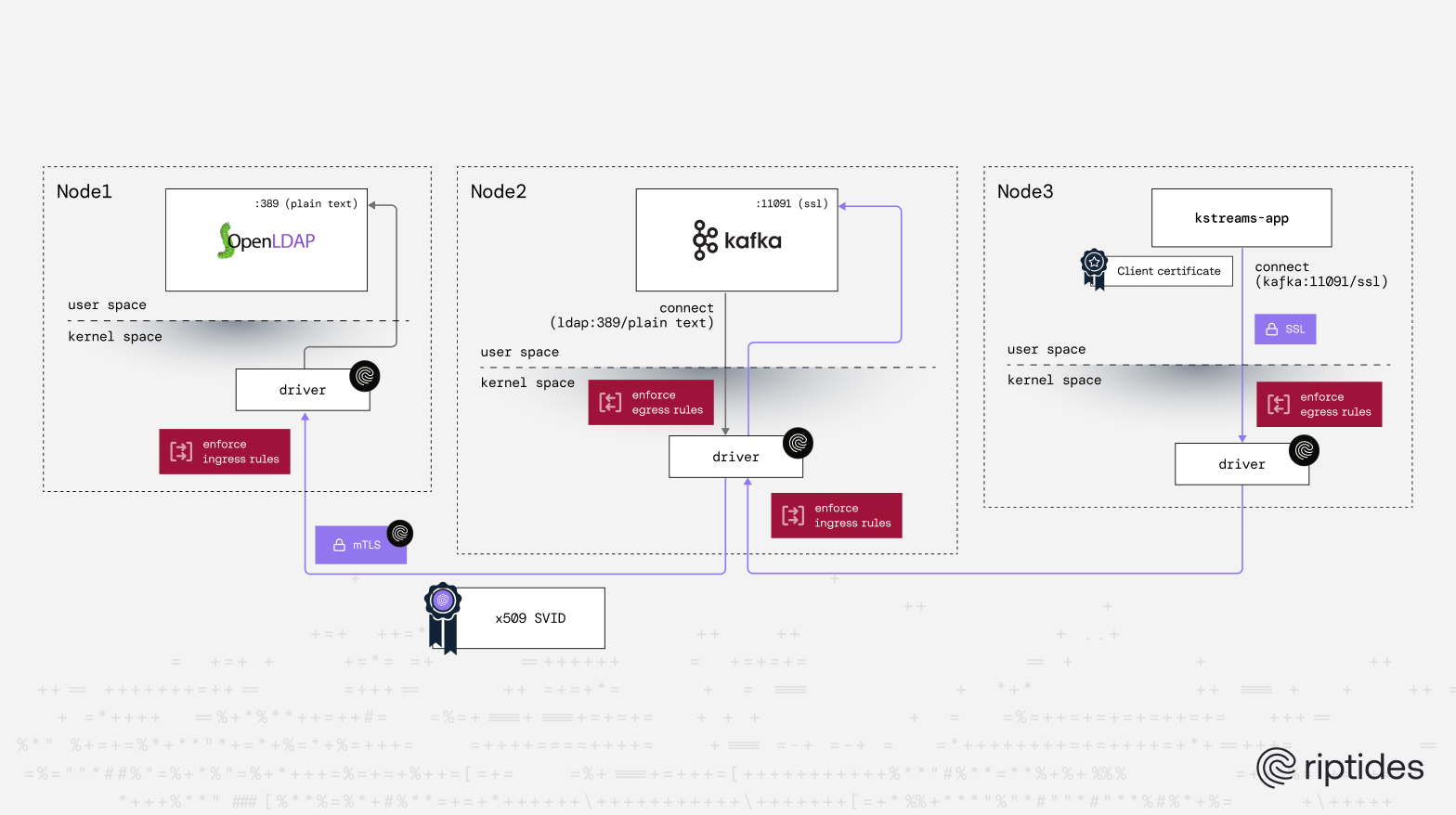

Riptides uses an identity-first security model built on SPIFFE workload identities. This eliminates static secrets, service accounts, and manual certificate management. Each process receives a verifiable identity at the kernel level, and all network traffic is authenticated based on that identity. Because Riptides operates in the kernel, it integrates into existing workloads with few or no code or configuration changes. Zero-trust enforcement, secretless authentication, and automated credential management remove the error-prone steps of storing, distributing, and handling secrets, which are often the source of leaks and operational headaches.

In this post, we’ll show how these principles apply to a real-world system: Apache Kafka, using the Confluent Platform Demo (cp-demo) running on Kubernetes. It’s a good example because the demo has multiple interconnected components that communicate:

- over plain-text, TLS, and mTLS channels,

- across protocols such as HTTP, LDAP, and Kafka,

- using authentication methods ranging from no-auth and Basic Auth to OAuth bearer tokens, SASL, and mTLS client certificates.

1. Assigning workload identities

The first step in using Riptides is to define workload identities for every process, including health checks, init containers, setup scripts, and long-running services. A workload identity is built from process metadata. The Riptides Driver, a Linux kernel module, matches the metadata of any process initiating or accepting network connections against the configured workload identities. When a match is found, Riptides issues a certificate (an x509 SVID) containing the SPIFFE workload identity and attaches it to the process and its network traffic.

To explore workload identities in more depth, see:

- Introduction to SPIFFE: Secure Identity for Workloads

- The Critical Role of Unique Workload Identity in Modern Infrastructure

- Workload Attestation and Metadata Gathering: Building Trust from the Ground Up

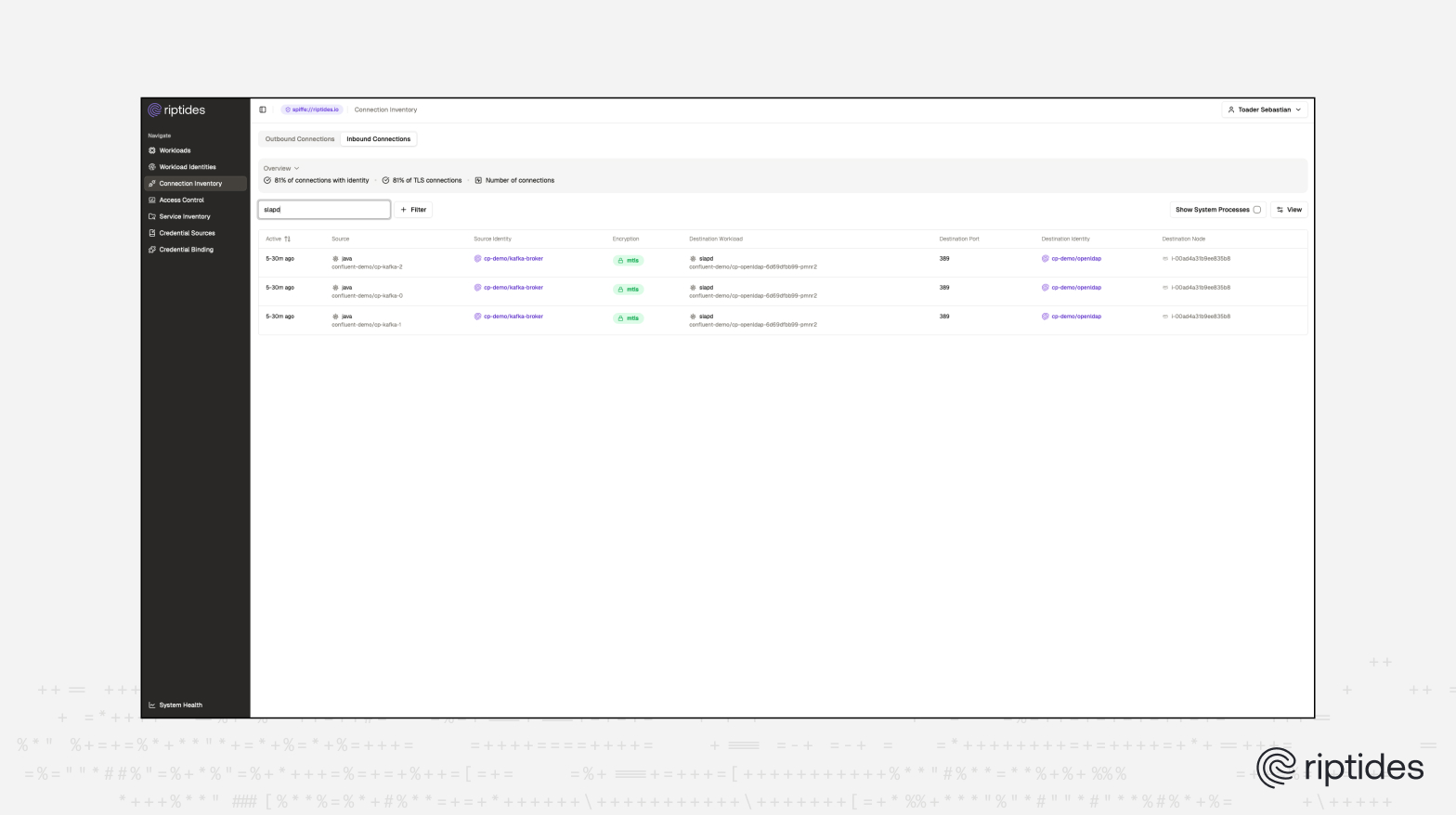

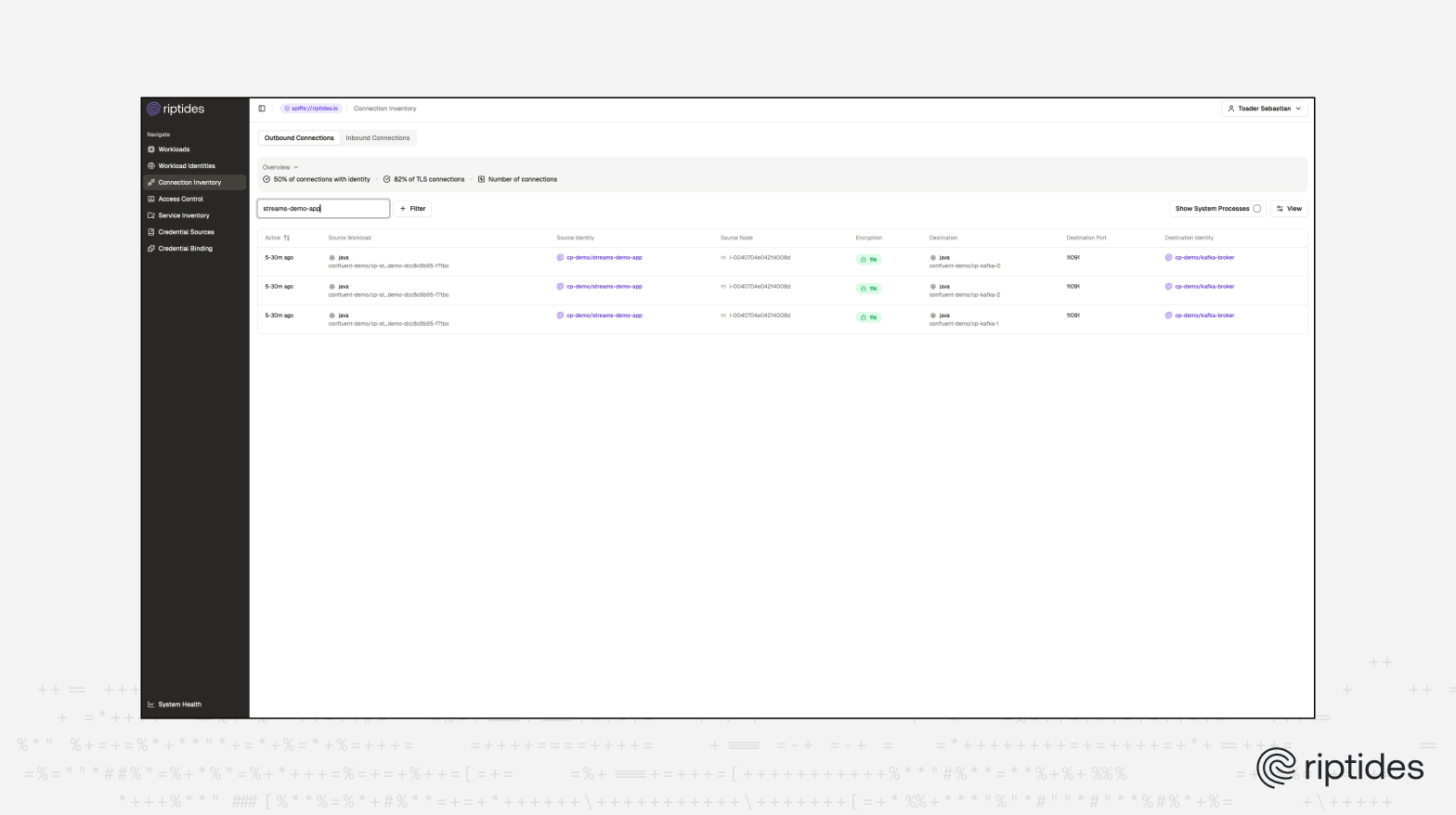

The Riptides UI is especially useful here. It shows all processes generating network traffic, along with their metadata, giving a full map of inbound and outbound connections. You can see which workloads are talking to each other, both within the datacenter and to external sources, whether the communication is encrypted, and whether secrets or authentication are involved. Riptides operates in the Linux kernel, collecting full telemetry on every inbound and outbound connection in real time. With this visibility, you can assign workload identities, enforce policies, and govern communications across the environment with precision.

Here is a snippet illustrating how workload identities are defined for the Kafka and LDAP components:

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: cp-demo-kafka-broker
  namespace: riptides-system
spec:
  scope:
    agentGroup:
      id: riptides/agentgroup/eu-west-1-all-os
  selectors:
    - k8s:container:name: kafka
      k8s:label:app.kubernetes.io/component: kafka
      process:name: java
  workloadID: cp-demo/kafka-broker
```

This configuration assigns the cp-demo/kafka-broker workload identity to any java process running in a container named kafka within a pod labeled app.kubernetes.io/component: kafka. When such a process initiates or receives network traffic, Riptides issues an x509 SVID with the SPIFFE ID spiffe://riptides.io/cp-demo/kafka-broker. Here, riptides.io is the SPIFFE trust domain configured in the Riptides Control Plane.
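The SPIFFE ID above is the trust domain followed by the workloadID path, in the standard spiffe://trust-domain/path form. The following minimal Python sketch composes an ID that way and validates it against the character rules of the SPIFFE ID specification. It is an illustration only, not a Riptides API. The function names are hypothetical, and the mapping from workloadID to path is inferred from this example.

```python
import re
from typing import NamedTuple

# SPIFFE ID spec: trust domains use only lowercase letters, digits, '.', '-', '_'.
TRUST_DOMAIN_RE = re.compile(r"[a-z0-9._-]+")
# Path segments may also contain uppercase letters, but no other characters.
SEGMENT_RE = re.compile(r"[A-Za-z0-9._-]+")


class SpiffeID(NamedTuple):
    trust_domain: str
    path: str  # empty, or starts with '/'


def spiffe_id_for(trust_domain: str, workload_id: str) -> str:
    """Hypothetical helper: build the SPIFFE ID for a workloadID, as in the example."""
    return f"spiffe://{trust_domain}/{workload_id}"


def parse_spiffe_id(raw: str) -> SpiffeID:
    """Split a SPIFFE ID into trust domain and path, rejecting malformed input."""
    prefix = "spiffe://"
    if not raw.startswith(prefix):
        raise ValueError(f"not a SPIFFE ID: {raw!r}")
    trust_domain, sep, path = raw[len(prefix):].partition("/")
    if not TRUST_DOMAIN_RE.fullmatch(trust_domain):
        raise ValueError(f"invalid trust domain: {trust_domain!r}")
    if not sep:
        return SpiffeID(trust_domain, "")
    for segment in path.split("/"):
        # Rejects empty segments ('//' or a trailing '/'), '.', '..' and illegal characters.
        if segment in (".", "..") or not SEGMENT_RE.fullmatch(segment):
            raise ValueError(f"invalid path segment: {segment!r}")
    return SpiffeID(trust_domain, "/" + path)


sid = parse_spiffe_id(spiffe_id_for("riptides.io", "cp-demo/kafka-broker"))
print(sid)  # SpiffeID(trust_domain='riptides.io', path='/cp-demo/kafka-broker')
```

Validating strictly makes malformed IDs fail early instead of silently never matching an allow-list entry.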

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: cp-demo-openldap
  namespace: riptides-system
spec:
  scope:
    agentGroup:
      id: riptides/agentgroup/eu-west-1-all-os
  selectors:
    - k8s:container:name: openldap
      k8s:label:app.kubernetes.io/component: openldap
      process:name: slapd
  workloadID: cp-demo/openldap
```

This configuration assigns the cp-demo/openldap workload identity to any slapd process running in a container named openldap within a pod labeled app.kubernetes.io/component: openldap.
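The following toy Python model makes the matching step concrete: it matches process metadata against the two WorkloadIdentity resources above. It assumes that every key within one selector must match (AND) and that any single selector is enough (OR). The post does not spell out these semantics. The class and function names are hypothetical, not part of the Riptides Driver.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

TRUST_DOMAIN = "riptides.io"  # the trust domain used in this post


@dataclass
class WorkloadIdentity:
    workload_id: str
    selectors: List[Dict[str, str]]


IDENTITIES = [
    WorkloadIdentity("cp-demo/kafka-broker", [{
        "k8s:container:name": "kafka",
        "k8s:label:app.kubernetes.io/component": "kafka",
        "process:name": "java",
    }]),
    WorkloadIdentity("cp-demo/openldap", [{
        "k8s:container:name": "openldap",
        "k8s:label:app.kubernetes.io/component": "openldap",
        "process:name": "slapd",
    }]),
]


def match_identity(metadata: Dict[str, str], identities: List[WorkloadIdentity]) -> Optional[str]:
    """Return the SPIFFE ID of the first identity with a matching selector, else None."""
    for identity in identities:
        for selector in identity.selectors:
            # Assumed semantics: every key/value pair in the selector must match.
            if all(metadata.get(key) == value for key, value in selector.items()):
                return f"spiffe://{TRUST_DOMAIN}/{identity.workload_id}"
    return None


broker_jvm = {
    "k8s:container:name": "kafka",
    "k8s:label:app.kubernetes.io/component": "kafka",
    "process:name": "java",
}
# A shell started in the same container (e.g. by a health check) does not match.
health_check_shell = {**broker_jvm, "process:name": "sh"}

print(match_identity(broker_jvm, IDENTITIES))          # spiffe://riptides.io/cp-demo/kafka-broker
print(match_identity(health_check_shell, IDENTITIES))  # None
```

The unmatched shell shows why identities need to be defined for health checks, init containers, and setup scripts as well as for long-running services.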

2. Securing plain-text communication

Some of the Confluent demo components communicate in plain text. For example, Kafka's Metadata Service (MDS) communicates with LDAP over unencrypted port 389:

```yaml
# Configure MDS to talk to AD/LDAP
KAFKA_LDAP_JAVA_NAMING_FACTORY_INITIAL: com.sun.jndi.ldap.LdapCtxFactory
KAFKA_LDAP_COM_SUN_JNDI_LDAP_READ_TIMEOUT: 3000
KAFKA_LDAP_JAVA_NAMING_PROVIDER_URL: ldap://openldap:389
```

With Riptides, this traffic is transparently upgraded from plain text to mTLS using the following Service and WorkloadIdentity configuration:

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: Service
metadata:
  name: cp-demo-openldap
  namespace: riptides-system
spec:
  addresses:
    - address: cp-openldap.confluent-demo.svc.cluster.local
      port: 389
  labels:
    app: openldap
---
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: cp-demo-kafka-broker
  namespace: riptides-system
spec:
  scope:
    agentGroup:
      id: riptides/agentgroup/eu-west-1-all-os
  selectors:
    - k8s:container:name: kafka
      k8s:label:app.kubernetes.io/component: kafka
      process:name: java
  workloadID: cp-demo/kafka-broker
  allowedSPIFFEIDs:
    inbound:
      - spiffe://riptides.io/cp-demo/kafka-broker
  egress:
    - connection:
        tls:
          mode: MUTUAL
      selectors:
        - app: openldap
      allowedSPIFFEIDs:
        - spiffe://riptides.io/cp-demo/openldap
---
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: cp-demo-openldap
  namespace: riptides-system
spec:
  scope:
    agentGroup:
      id: riptides/agentgroup/eu-west-1-all-os
  selectors:
    - k8s:container:name: openldap
      k8s:label:app.kubernetes.io/component: openldap
      process:name: slapd
  workloadID: cp-demo/openldap
  allowedSPIFFEIDs:
    inbound:
      - spiffe://riptides.io/cp-demo/kafka-broker
      - spiffe://riptides.io/cp-demo/openldap
  ingress:
    - connection:
        tls:
          mode: MUTUAL
      port: 389
```

This configuration ensures that connections from processes with the cp-demo/kafka-broker workload identity to the openldap service on port 389 are made over mTLS.

Riptides automatically uses the x509 SVID certificates generated for workloads to enforce mTLS at the kernel layer. It also applies ingress and egress rules, ensuring only permitted workloads communicate.
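The two-sided check described above can be modeled roughly as follows. The client's egress rules must allow the destination Service and the server's SPIFFE ID. The server's ingress rules must allow the client's SPIFFE ID on the target port. This simplified Python sketch uses the values from the OpenLDAP example. The data layout, the function name, and the fallback from an ingress rule to the top-level inbound list are assumptions, not documented Riptides behavior.

```python
from typing import Optional

BROKER_ID = "spiffe://riptides.io/cp-demo/kafka-broker"
LDAP_ID = "spiffe://riptides.io/cp-demo/openldap"
host = "cp-openldap.confluent-demo.svc.cluster.local"

# Simplified, hypothetical mirrors of the YAML resources above.
OPENLDAP_SERVICE = {"addresses": [(host, 389)], "labels": {"app": "openldap"}}
KAFKA_BROKER = {
    "spiffe_id": BROKER_ID,
    "inbound": [BROKER_ID],
    "egress": [{"selectors": [{"app": "openldap"}], "allowed": [LDAP_ID], "tls": "MUTUAL"}],
    "ingress": [],
}
OPENLDAP = {
    "spiffe_id": LDAP_ID,
    "inbound": [BROKER_ID, LDAP_ID],
    "egress": [],
    "ingress": [{"port": 389, "tls": "MUTUAL"}],
}


def labels_match(selector: dict, labels: dict) -> bool:
    return all(labels.get(k) == v for k, v in selector.items())


def authorize(client: dict, server: dict, service: dict, address: str, port: int) -> Optional[str]:
    """Return the agreed TLS mode for an allowed connection; raise PermissionError otherwise."""
    if (address, port) not in service["addresses"]:
        raise PermissionError("destination is not part of the Service")
    # Client side: an egress rule must select this Service and allow the server's ID.
    egress = next((r for r in client["egress"]
                   if any(labels_match(s, service["labels"]) for s in r["selectors"])
                   and server["spiffe_id"] in r["allowed"]), None)
    if egress is None:
        raise PermissionError("client egress rules do not allow this destination")
    # Server side. Assumptions: a port without an ingress rule is denied, and a rule
    # without its own allow-list falls back to the top-level inbound list.
    ingress = next((r for r in server["ingress"] if r["port"] == port), None)
    allowed = ingress.get("allowed", server["inbound"]) if ingress else []
    if client["spiffe_id"] not in allowed:
        raise PermissionError("server ingress rules do not allow this client")
    modes = {m for m in (egress.get("tls"), ingress.get("tls")) if m}
    if len(modes) > 1:
        raise PermissionError("client and server disagree on the TLS mode")
    return modes.pop() if modes else None


print(authorize(KAFKA_BROKER, OPENLDAP, OPENLDAP_SERVICE, host, 389))  # MUTUAL
```

Checking both sides means a compromised client cannot simply widen its own egress rules to reach the LDAP server. The server's ingress rules still have to list its SPIFFE ID.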

The screenshot below shows the final state, after Riptides has transparently upgraded all plain-text Kafka-to-LDAP connections to mTLS at the kernel layer.

3. Allowing existing TLS/mTLS traffic to pass through

If a component already uses TLS or mTLS, Riptides can operate in pass-through mode. The traffic remains unchanged, but identities are still assigned and communication rules enforced. For example, the kstreams-app component performs mTLS authentication with Kafka. Riptides allows the original client certificate to pass through:

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: Service
metadata:
  name: cp-demo-kafka-cluster-encrypted-listeners
  namespace: riptides-system
spec:
  addresses:
    - address: cp-kafka.confluent-demo.svc.cluster.local
      port: 10091 # SASL_SSL listener with OAuth/token authentication
    - address: cp-kafka.confluent-demo.svc.cluster.local
      port: 11091 # SSL listener with client certificate authentication
    - address: cp-kafka.confluent-demo.svc.cluster.local
      port: 8091 # Metadata Service (MDS) https listener
  labels:
    app: kafka-cluster-encrypted-listeners
---
apiVersion: core.riptides.io/v1alpha1
kind: Service
metadata:
  name: cp-demo-kafka-broker-0-encrypted-listeners
  namespace: riptides-system
spec:
  addresses:
    - address: cp-kafka-0.cp-kafka-headless.confluent-demo.svc.cluster.local
      port: 10091 # SASL_SSL listener with OAuth/token authentication
    - address: cp-kafka-0.cp-kafka-headless.confluent-demo.svc.cluster.local
      port: 11091 # SSL listener with client certificate authentication
    - address: cp-kafka-0.cp-kafka-headless.confluent-demo.svc.cluster.local
      port: 8091 # Metadata Service (MDS) https listener
  labels:
    app: kafka-broker-0-encrypted-listeners
---
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: cp-demo-kafka-broker
  namespace: riptides-system
spec:
  scope:
    agentGroup:
      id: riptides/agentgroup/eu-west-1-all-os
  selectors:
    - k8s:container:name: kafka
      k8s:label:app.kubernetes.io/component: kafka
      process:name: java
  workloadID: cp-demo/kafka-broker
  allowedSPIFFEIDs:
    inbound:
      - spiffe://riptides.io/cp-demo/kafka-broker
  ingress:
    - allowedSPIFFEIDs:
        - spiffe://riptides.io/cp-demo/kafka-connect
        - spiffe://riptides.io/cp-demo/streams-demo-app
      connection:
        tls:
          mode: PERMISSIVE
      port: 11091
  egress:
    - connection:
        tls:
          mode: MUTUAL
      selectors:
        - app: openldap
      allowedSPIFFEIDs:
        - spiffe://riptides.io/cp-demo/openldap
---
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: cp-demo-streams-demo-app
  namespace: riptides-system
spec:
  scope:
    agentGroup:
      id: riptides/agentgroup/eu-west-1-all-os
  workloadID: cp-demo/streams-demo-app
  egress:
    - allowedSPIFFEIDs:
        - spiffe://riptides.io/cp-demo/kafka-broker
      selectors:
        - app: kafka-cluster-encrypted-listeners
        - app: kafka-broker-0-encrypted-listeners
        - app: kafka-broker-1-encrypted-listeners
        - app: kafka-broker-2-encrypted-listeners
    - allowedSPIFFEIDs:
        - spiffe://riptides.io/cp-demo/schema-registry
      selectors:
        - app: schema-registry
  selectors:
    - k8s:container:name: streams-demo
      k8s:label:app.kubernetes.io/component: streams-demo
      process:name: java
```

This configuration shows that the streams-demo Java application is permitted to initiate connections only to the cp-demo/schema-registry and cp-demo/kafka-broker workloads. The identity it presents when initiating these connections is cp-demo/streams-demo-app.

On the server side, the cp-demo/kafka-broker workload is configured to accept connections on port 11091 only from the cp-demo/kafka-connect and cp-demo/streams-demo-app workloads in PERMISSIVE mode.

PERMISSIVE mode means that TLS or mTLS is established by the workloads themselves in user space, rather than by Riptides in kernel space.
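The post does not describe how Riptides recognizes that a connection already carries application-level TLS. One common, generic technique is to inspect the first bytes of the stream for a TLS handshake record. The Python sketch below shows that heuristic together with a hypothetical classification of the two modes. It illustrates the concept only and is not Riptides' implementation. The classify function and its messages are invented for this example.

```python
import ssl


def looks_like_tls(first_bytes: bytes) -> bool:
    """Heuristic: does the stream start with a TLS ClientHello record?"""
    return (
        len(first_bytes) >= 6
        and first_bytes[0] == 0x16  # record content type: handshake
        and first_bytes[1] == 0x03  # record version major byte (TLS 1.x)
        and first_bytes[5] == 0x01  # handshake message type: ClientHello
    )


def classify(mode: str, first_bytes: bytes) -> str:
    """Hypothetical summary of who is responsible for TLS on a connection."""
    if mode == "MUTUAL":
        return "kernel: Riptides establishes mTLS with the workload SVIDs"
    if mode == "PERMISSIVE":
        if looks_like_tls(first_bytes):
            return "user space: application TLS passes through unchanged"
        return "user space: application sent plain text"
    raise ValueError(f"unknown mode: {mode!r}")


# Produce a real ClientHello in memory, without any network I/O.
incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
tls_client = ssl.create_default_context().wrap_bio(
    incoming, outgoing, server_hostname="cp-kafka.confluent-demo.svc.cluster.local"
)
try:
    tls_client.do_handshake()
except ssl.SSLWantReadError:
    pass  # expected: the ClientHello is written and the client waits for the server
client_hello = outgoing.read()

print(classify("PERMISSIVE", client_hello))
print(classify("PERMISSIVE", b"GET /subjects HTTP/1.1\r\n"))
```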

For brevity, this post includes only configuration snippets that illustrate the concepts; the full configuration for the demo application is longer. If you are interested in a full demo, please get in touch with us.

The screenshot below shows that Riptides detects an existing TLS connection between the streams demo app and Kafka and lets it pass through unchanged, since the workloads already establish a secure channel on their own:

4. Just-in-time secret injection on the wire

Some components use Basic Auth for health checks. For example, the Schema Registry health check is configured like this:

```shell
curl --user schemaregistryUser:schemaregistryUser --fail --silent --insecure https://cp-schemaregistry.confluent-demo.svc.cluster.local:8085/subjects --output /dev/null || exit 1
```

With Riptides, credentials don’t need to be stored in config files, environment variables, or command-line flags. Instead, they’re injected directly into the network stream at the kernel level, transparently to the application. We won’t dive into the internals here (they are covered in detail in the posts linked below). This health check illustrates the idea: with on-the-wire injection, the hard-coded --user credentials no longer need to appear on the command line.
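At the byte level, injecting Basic Auth means adding an Authorization header whose value is the Base64 encoding of user:password, which is what curl builds from --user. The Python sketch below performs that transformation on a raw HTTP/1.1 request in user space, purely to illustrate what changes on the wire. Riptides does this in the kernel, and the helper names here are hypothetical. The sketch works on plain-text HTTP, so it assumes the injection point sees the request before it is encrypted. The post does not describe how this interacts with the health check's HTTPS endpoint.

```python
import base64


def basic_auth_header(username: str, password: str) -> bytes:
    """Build the header curl sends for --user username:password."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return b"Authorization: Basic " + token


def inject_basic_auth(request: bytes, username: str, password: str) -> bytes:
    """Insert an Authorization header right after the HTTP request line."""
    head, sep, body = request.partition(b"\r\n\r\n")
    if not sep:
        raise ValueError("request head is incomplete")
    lines = head.split(b"\r\n")
    # Drop any Authorization header the client sent so the injected one is authoritative.
    lines = [line for line in lines if not line.lower().startswith(b"authorization:")]
    lines.insert(1, basic_auth_header(username, password))
    return b"\r\n".join(lines) + sep + body


# What the health check would send without --user: no secret lives in the process.
request = (
    b"GET /subjects HTTP/1.1\r\n"
    b"Host: cp-schemaregistry.confluent-demo.svc.cluster.local:8085\r\n"
    b"Accept: */*\r\n\r\n"
)
# The credential is passed in directly here; where Riptides sources it is not covered in this post.
print(inject_basic_auth(request, "schemaregistryUser", "schemaregistryUser").decode())
```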

For a deeper exploration of the mechanism and its security implications, see:

- On-the-Wire Credential Injection: Secretless AWS Bedrock Access example

- On demand credentials – Secretless AI assistant example on GCP

- Workload Identity Without Secrets: a Blueprint for the Post-Credential Era

- Shai-Hulud 2.0: A Technical Breakdown and Why Secrets Need to Die

5. Simplifying Kafka client configuration

So far, the Confluent demo required no changes: all security upgrades were handled purely through Riptides configuration.

To fully remove secrets from client workloads, Kafka clients are reconfigured to connect in plain-text mode. Riptides then transparently upgrades all traffic to mTLS at the kernel level, keeping secrets out of userspace while ensuring encryption and authentication.

This eliminates keystores, truststores, and client-side credentials, reducing operational overhead and the risk of leaks. Servers only need to trust the Riptides CA to validate client certificates.
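On the client side, the change amounts to dropping the TLS and SASL material from the Kafka client properties. The Python sketch below uses standard Kafka client property names (security.protocol, ssl.keystore.location, and so on). The file paths and passwords are hypothetical placeholders. Whether the bootstrap address or port also changes depends on the deployment, which the post does not specify, so the sketch leaves it untouched.

```python
from typing import Dict

# A typical mTLS client configuration before the change (placeholder values).
MTLS_CLIENT: Dict[str, str] = {
    "bootstrap.servers": "cp-kafka.confluent-demo.svc.cluster.local:11091",
    "security.protocol": "SSL",
    "ssl.keystore.location": "/etc/kafka/secrets/client.keystore.p12",  # hypothetical path
    "ssl.keystore.password": "changeit",  # placeholder secret
    "ssl.key.password": "changeit",  # placeholder secret
    "ssl.truststore.location": "/etc/kafka/secrets/client.truststore.p12",  # hypothetical path
    "ssl.truststore.password": "changeit",  # placeholder secret
}


def to_plaintext(props: Dict[str, str]) -> Dict[str, str]:
    """Return a copy without ssl.* and sasl.* settings, switched to PLAINTEXT."""
    plain = {k: v for k, v in props.items() if not k.startswith(("ssl.", "sasl."))}
    plain["security.protocol"] = "PLAINTEXT"
    return plain


def to_properties(props: Dict[str, str]) -> str:
    """Render the body of a client.properties file."""
    return "\n".join(f"{key}={value}" for key, value in sorted(props.items()))


print(to_properties(to_plaintext(MTLS_CLIENT)))
```

Every property that referenced a file or a password disappears, which is the operational saving the paragraph above describes.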

6. Truststore and keystore files locked to server workloads

Certain server-side features, such as Kafka extracting principals from client certificates, still rely on keystores and truststores. With Riptides, these files are dynamically generated, updated, and locked down. Riptides provides each Kafka broker with its own truststore and keystore via sysfs, ensuring that only the Kafka workload can read them. To use these files, Kafka simply needs to be reconfigured to point to the sysfs paths instead of its original keystore and truststore locations.
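Pointing Kafka at the Riptides-managed files comes down to overriding the broker's standard SSL store properties. The Python sketch below renders those overrides. The directory and file names are hypothetical placeholders, because the post does not give the actual sysfs paths. The store type and whether the files need a password are also assumptions to check against the Riptides documentation. The existing broker settings shown are hypothetical as well.

```python
from pathlib import PurePosixPath
from typing import Dict

# Hypothetical placeholder: the real sysfs location is not given in this post.
RIPTIDES_SYSFS_DIR = "/sys/RIPTIDES_PATH_PLACEHOLDER/kafka-broker"


def broker_store_overrides(sysfs_dir: str, store_type: str = "PKCS12") -> Dict[str, str]:
    """Kafka broker properties that point the key and trust stores at sysfs."""
    base = PurePosixPath(sysfs_dir)
    return {
        "ssl.keystore.location": str(base / "keystore"),  # hypothetical file name
        "ssl.keystore.type": store_type,  # assumption: format not stated in the post
        "ssl.truststore.location": str(base / "truststore"),  # hypothetical file name
        "ssl.truststore.type": store_type,
        # Require client certificates so Kafka can derive principals from them.
        "ssl.client.auth": "required",
    }


# Hypothetical existing broker settings; the overrides replace the store locations.
existing = {
    "listeners": "SSL://:11091",
    "ssl.keystore.location": "/etc/kafka/secrets/kafka.keystore.jks",
}
merged = {**existing, **broker_store_overrides(RIPTIDES_SYSFS_DIR)}
for key in sorted(merged):
    print(f"{key}={merged[key]}")
```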

Because Riptides manages these files automatically, it handles the full lifecycle:

- Rotates certificates when the originals are updated

- Adds new Certificate Authorities (CAs) as needed

- Ensures that Kafka always has up-to-date, valid trust material without manual intervention

This approach eliminates the operational burden of managing keystores/truststores while maintaining strong security guarantees for the Kafka server.

Key takeaways

Riptides lets teams secure both new and existing workloads without adding operational complexity. By enforcing zero-trust principles, enabling secretless communication, and automating secret and certificate management, it eliminates the traditional pain points and risks of manual credential handling.

All workloads are uniquely identified at the kernel level, ensuring no unidentified or rogue process can communicate. Existing applications continue to operate with minimal or no changes, while security is applied consistently across the environment.

In short, Riptides makes identity-first, automated security the default, reducing operational risk, simplifying compliance, and letting teams focus on building and running applications rather than managing secrets.

If you enjoyed this post, follow us on LinkedIn and X for more updates. If you’d like to see Riptides in action, get in touch with us for a demo.
