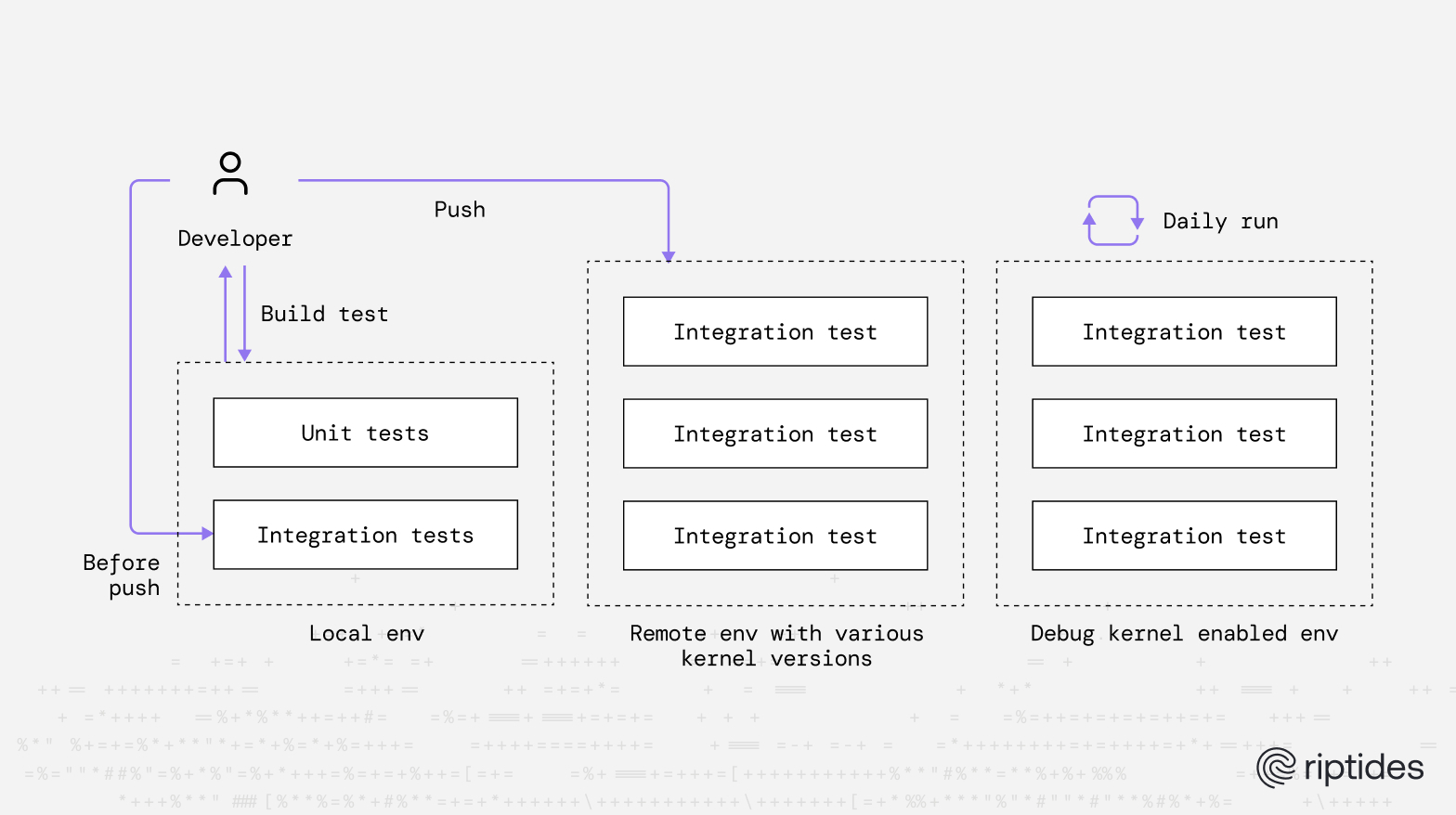

Introduction: Reliability at the Core

At Riptides, reliability is not an add-on; it is the constraint that drives how we build and ship kernel-level tooling. Testing defines our development process because the module operates inside the Linux kernel, intercepting networking flows and attaching SPIFFE-based workload identities. At this level of privilege, even minor regressions can translate into unpredictable system behaviour. Rigorous validation is therefore non-negotiable.

In earlier posts, we highlighted features and user experience. Here, we focus on how we ensure correctness across the entire lifecycle: from isolated logic checks to full system-level validation across distributions, kernel versions, vendor patches, and cloud backports. What follows is a practical overview of the testing layers that keep the module predictable and safe in every supported environment.

Unit Tests Inside the Code

Our first validation layer lives directly in the codebase. When compiled with the TEST build flag, the module exposes a suite of internal unit tests that verify core logic during build time. These checks are intentionally small, fast, and isolated, allowing us to detect regressions long before they reach integration testing or any release pipeline.

Kernel-module development leaves little room for assumptions. There are no external libraries to fall back on, and missing functionality must be implemented in-kernel. This makes tight unit-level validation essential: it ensures that utility functions, data structures, and protocol logic behave exactly as expected before they ever interact with the broader system.

Infrastructure Testing with Bats

Once local checks pass, the code enters our infrastructure test suite. This layer validates real system behaviour: module loading, error paths, boundary conditions, and interactions with the underlying OS. One of the earliest lessons was that “Linux is not Linux”. Differences across distributions, kernel versions, vendor patches, and cloud-provider backports are substantial; some kernels ship with patch levels above 1500. Broad, repeated, multi-distro coverage is the only reliable way to match the environments our customers actually run.

A recurring challenge in kernel module testing is provisioning and tearing down environments in a consistent, automated way. Because the tests must interact directly with the kernel, the most natural control surface is the shell. After evaluating several options, we standardised on Bats (Bash Automated Testing System), a TAP-compliant framework that automates repetitive shell-driven testing tasks and provides a maintainable structure for system-level validation. It executes commands exactly as a user or CI job would, which makes it a strong fit for configuring, loading, and inspecting kernel modules.

Bats alone solves test orchestration, but meaningful validation requires expressive assertions. The surrounding ecosystem (bats-assert, bats-file, and other community extensions) provides the tooling needed to check command outcomes, inspect filesystem state, and verify expected output. Because the framework is open source and simple to extend, we can adapt it when kernel-specific edge cases require custom logic. Together, these components give us a maintainable, shell-native test environment that scales well across kernels, distributions, and CI pipelines.
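As a rough illustration of the kind of custom logic a shell-native suite can carry, here is a minimal sketch of a helper that checks whether a module appears in a /proc/modules-style listing. The function name `module_is_loaded`, the module name `example_mod`, and the optional fixture argument are hypothetical and are not taken from our suite.

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeed if a module name appears in a
# /proc/modules-style listing. The second argument defaults to
# /proc/modules; it exists so the check can run against a fixture file.
module_is_loaded() {
  local name="$1"
  local listing="${2:-/proc/modules}"
  # Each line of /proc/modules starts with the module name, followed by
  # whitespace-separated fields (size, use count, ...). Match the first
  # field exactly so "example" does not match "example_mod".
  awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$listing"
}
```

Inside a Bats test, a helper like this could be called through `run module_is_loaded example_mod` and followed by `assert_success` from bats-assert.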

Why Bats Works for Us

Bats fills an important gap in our workflow: it behaves like an enhanced Bash environment, close enough to manipulate kernel modules directly, yet structured enough to support a large, repeatable integration suite. It offers:

- A natural workflow for integration tests built around kernel modules

- Lifecycle hooks (setup, setup_file, teardown, teardown_file) that keep repetitive work organized

- A mature, well-tested tool trusted by a broad community

- Strong helpers from bats-assert and bats-file for reliable, expressive checks

- Well-structured, easy-to-follow documentation

Where Bats Falls Short

No tool is perfect. Bats inherits both the strengths and the constraints of Bash, and in our environment those constraints still apply:

- Bash is a constrained programming language

- Debugging failures can be slower than in more expressive test frameworks

- Buffered output can obscure logs or cause inconsistencies

- No built-in fail-fast behaviour

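We design our test patterns and supporting utilities around these constraints and apply compensating patterns where necessary, so the suite stays readable, deterministic, and maintainable within Bash’s boundaries.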
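To make the buffered-output point concrete, here is a minimal sketch of one possible compensating pattern. Bats captures a test's output and normally shows it only on failure, while text written to file descriptor 3 is passed straight through; prefixing it with `#` keeps the stream TAP-compliant. The helper name `diag` is hypothetical, and the fallback to stderr is an assumption added so the function also works outside Bats.

```shell
#!/usr/bin/env bash

# Hypothetical helper: emit a TAP-style diagnostic line immediately
# instead of waiting for the captured test output to be printed.
diag() {
  # Probe whether file descriptor 3 is open (it is inside a Bats test).
  if { true >&3; } 2>/dev/null; then
    printf '# %s\n' "$*" >&3
  else
    # Outside Bats, fd 3 is usually closed; fall back to stderr.
    printf '# %s\n' "$*" >&2
  fi
}
```

A test would call it as `diag "loading module"` before a long-running step, so progress stays visible even if the step later hangs.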


A Note on TAP and Why It Matters

Bats outputs results in TAP (Test Anything Protocol), a long-standing, interoperability-focused, language-agnostic format widely used across UNIX tooling. TAP’s line-oriented output structure makes test results straightforward to parse, aggregate, and integrate into CI pipelines, log processors, and external dashboards without custom adapters.

This uniformity matters in a kernel testing workflow. TAP allows us to correlate Bats results with kernel logs, system trace data, dmesg output, and CI metadata using the same parsing logic end-to-end. When investigating failures, especially those involving timing, concurrency, or subtle kernel–user-space interactions, having consistent, interoperable test output significantly reduces the debugging surface area.
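Because TAP is line-oriented, a few lines of shell are enough to summarise a run. The sketch below is illustrative only: the function name `tap_summary` is hypothetical, it counts just the top-level `ok` and `not ok` lines, and it ignores the plan line, diagnostics, and directives such as skip or todo.

```shell
#!/usr/bin/env bash

# Hypothetical helper: read a TAP stream on stdin and print
# "passed=N failed=M". Returns non-zero if any test failed.
tap_summary() {
  awk '
    /^ok( |$)/     { passed++ }   # "ok 1 description"
    /^not ok( |$)/ { failed++ }   # "not ok 2 description"
    END {
      printf "passed=%d failed=%d\n", passed, failed
      exit (failed > 0)
    }
  '
}
```

Assuming a Bats release that supports the `--tap` flag, usage could look like `bats --tap tests/ | tap_summary`, where `tests/` is a placeholder path.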

We also covered the kernel-level debugging tools we rely on in a previous post, and TAP integrates cleanly with those workflows as well.

Testing Metrics

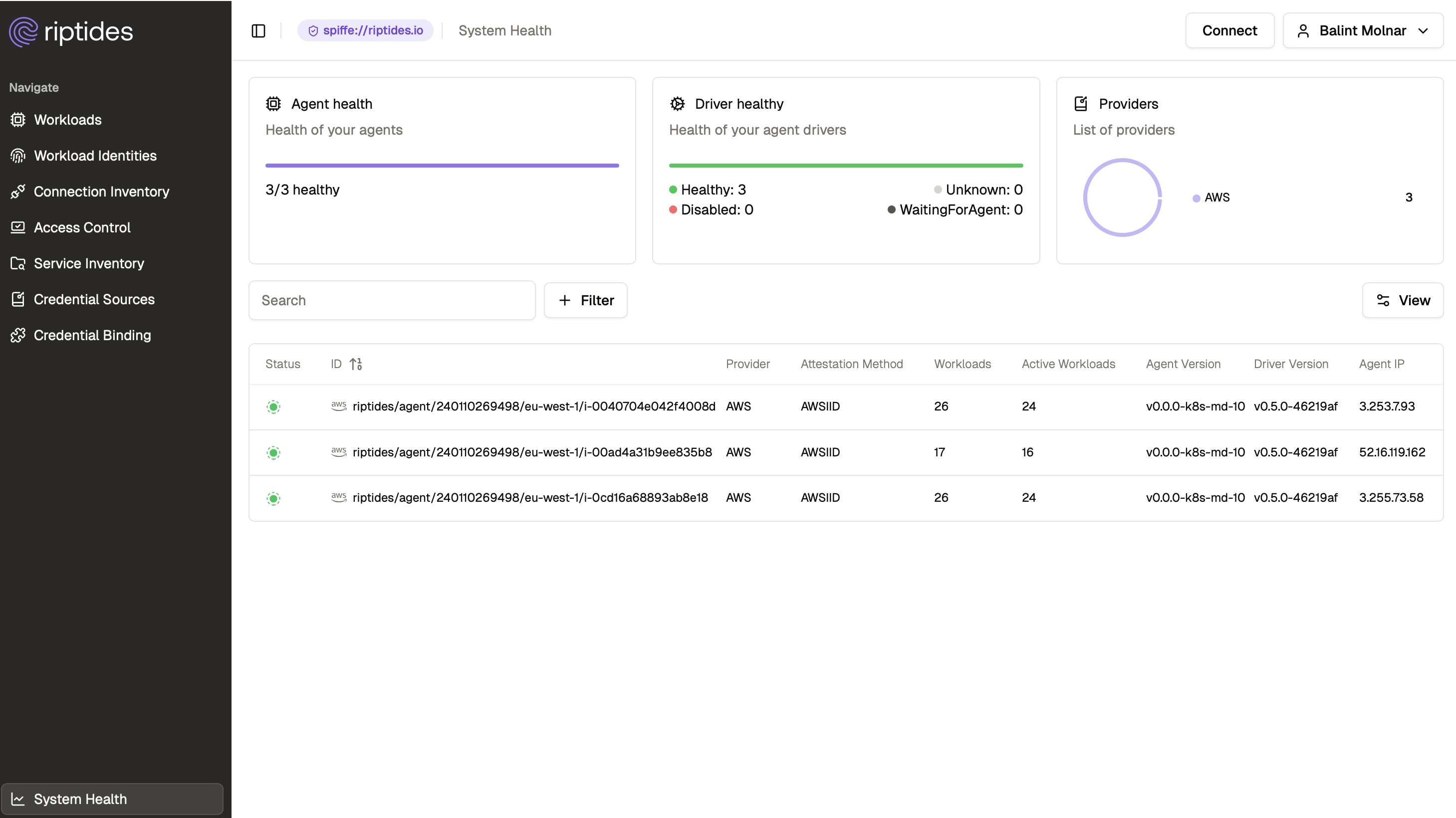

Metrics form a critical contract between the kernel module and the user-facing components of our platform. Because our UI relies heavily on these metrics for visibility, troubleshooting, and behavioural analysis, validating their correctness is a core part of the infrastructure test suite.

We have written extensively about how we use kernel-level metrics, both for testing and for attaching SPIFFE-based identities to workloads; they form a critical part of our validation pipeline.

To ensure reliability, we run a lightweight in-house metrics exporter that retrieves the module’s current metric output during testing. The exported data is then inspected using bats-file, which allows us to perform precise, file-level assertions on the retrieved payload. For example, assert_file_contains helps us verify that required metric fields appear exactly as expected, confirm that naming conventions remain stable, and ensure that values follow the correct formats.

This layer of testing catches issues that structural tests alone cannot, such as missing fields, unexpected formatting changes, or regressions in counter behaviour. By combining live metric retrieval with file-based assertions, we validate not only that the module emits metrics, but that it emits the metrics our platform and our customers depend on.
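The idea of checking an exported payload for required fields can be sketched in plain shell. Everything specific here is an assumption made for illustration: the function name `missing_metrics`, the metric names, and the payload format (one `name value` pair per line with an integer value) are hypothetical and do not describe our exporter's actual output.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print every required metric name that is absent
# (or has a non-integer value) in the exported payload, and return
# non-zero if at least one is missing.
# Usage: missing_metrics <payload-file> <metric-name>...
missing_metrics() {
  local file="$1"
  shift
  local name missing=0
  for name in "$@"; do
    # Assumed format: "<name><whitespace><integer>" on a line of its own.
    if ! grep -Eq "^${name}[[:space:]]+[0-9]+$" "$file"; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}
```

In a Bats test, the same check maps naturally onto one `assert_file_contains` call per required field; the loop form above simply reports all missing names in one pass.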

Daily Debug-Kernel Validation

Once per day, we run the full infrastructure suite against a debug kernel built with instrumentation designed to reveal subtle system-level defects. These kernels enable detection of memory-safety issues, race conditions, lock-ordering bugs, and other deep failures that rarely surface under normal runtime conditions.

The primary tools we rely on include:

KASAN (Kernel Address Sanitizer)

KASAN instruments memory accesses and detects out-of-bounds reads/writes, use-after-free conditions, and other memory-safety violations. Its fault reports frequently expose latent bugs that are extremely difficult to trigger outside an instrumented environment.

kmemleak

kmemleak performs a reachability-based scan of the kernel heap to identify allocations that are no longer referenced. While not a real-time leak detector, it reliably surfaces leaks that may not crash a system immediately but would gradually erode stability or memory availability over extended workloads.

KFENCE (Kernel Electric Fence)

KFENCE offers low-overhead memory corruption detection suitable for long-running or production-like tests. While its detection set is narrower than KASAN’s, its minimal runtime cost allows us to catch subtle out-of-bounds accesses and invalid writes during workloads that would be impractical under KASAN’s heavy instrumentation.

lockdep

lockdep monitors lock acquisition paths and validates ordering rules across kernel synchronization primitives. It detects deadlock risks, incorrect lock nesting, lock inversion, and other concurrency issues that only appear under specific timing or load conditions.

The purpose of this daily run is not merely achieving a clean “all tests passed.” The debug-kernel environment examines memory usage, synchronization behaviour, concurrency boundaries, and error paths with far greater scrutiny than any standard configuration. These checks are executed across all kernel versions and distributions in use by our customers, ensuring long-term reliability and consistent behaviour.
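A passing test run on a debug kernel still needs its kernel log checked, since these tools report through the log rather than through test exit codes. The following is a minimal sketch, not our actual pipeline step: the function name `kernel_debug_reports` is hypothetical, and the patterns are substrings these tools commonly print, not an exhaustive list.

```shell
#!/usr/bin/env bash

# Hypothetical helper: read kernel log text on stdin, print lines that
# look like KASAN, KFENCE, kmemleak, or lockdep reports, and return
# non-zero if any such line is found.
kernel_debug_reports() {
  # grep exits 0 when it finds a match; invert it so that "report
  # found" becomes a failure for the caller.
  ! grep -E 'BUG: KASAN:|BUG: KFENCE:|kmemleak: [0-9]+ new suspected memory leaks|possible circular locking dependency detected'
}
```

A CI step could then run `dmesg | kernel_debug_reports` after the suite finishes and fail the job whenever the function prints anything.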

We’ve previously blogged in detail about the kernel-level debug tooling we use to uncover memory, concurrency, and system-level issues: Practical Linux Kernel Debugging: From pr_debug() to KASAN/KFENCE

A Glimpse Into a Real Bats Test

To make the testing approach more concrete, here is a small, self-contained example demonstrating how a typical Bats test is structured. It shows lifecycle hooks, command execution, and basic assertions using bats-assert and bats-file.

```shell
load 'test_helper/bats-support/load.bash'
load 'test_helper/bats-assert/load.bash'
load 'test_helper/bats-file/load.bash'

setup() {
  # Prepare environment for the test
  touch "$BATS_TEST_TMPDIR/example.txt"
  echo "hello world" > "$BATS_TEST_TMPDIR/example.txt"
}

teardown() {
  # Clean up after each test
  rm -f "$BATS_TEST_TMPDIR/example.txt"
}

@test "example file contains expected text" {
  run cat "$BATS_TEST_TMPDIR/example.txt"
  assert_success
  assert_output --partial "hello world"

  # Use bats-file for file-level assertions
  assert_file_contains "$BATS_TEST_TMPDIR/example.txt" "hello"
}
```

This example highlights the workflow we rely on across the wider suite:

- setup/teardown ensure test isolation

- commands are executed exactly as they would in a shell

- bats-assert validates output and return codes

- bats-file inspects file contents with precise assertions

Even in more complex scenarios, such as metrics validation, kernel interactions, and error paths, the same structure scales predictably and reliably.

Conclusion

Testing at Riptides is not a checkbox exercise; it is a layered system of safeguards that ensures correctness at every stage of development. Unit tests verify core logic, Bats-driven infrastructure tests validate real-world behaviour across kernels and distributions, and daily debug-kernel runs surface subtle memory, concurrency, and ordering issues that only appear under stress.

By combining these layers, we maintain high confidence that both the kernel module and the broader platform behave predictably, safely, and consistently across all supported environments. As the product evolves, the test infrastructure evolves with it, keeping reliability a defining characteristic of the Riptides stack.

If you enjoyed this post, follow us on LinkedIn and X for more updates. If you’d like to see Riptides in action, get in touch with us for a demo.
